If you’re using 403 or 404 errors to restrict Googlebot’s crawling rate, it is essential that you read this entire blog post.

This article explains why using 404 or 403 errors to limit Googlebot’s crawl rate is not recommended, and shares best practices for managing Googlebot’s crawl rate on your website.

Let’s explore this topic in detail. Before we dive in, though, let’s first understand what 4xx errors are and how they have been used to rate-limit Googlebot.

What are 4xx client errors?

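4xx errors, also known as client errors, indicate that a server could not fulfill a request because of a problem on the client’s side, such as a malformed request, missing authorization, or a URL that does not exist.

For example, a 404 usually means that the requested page does not exist: it may once have existed on the website but is no longer live and has not been redirected elsewhere.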

One of the most common 4xx errors is 404, also known as the ‘Not Found’ error. However, there are many other 4xx errors that you may encounter.

These include:

- 400 Bad Request

- 401 Unauthorized

- 402 Payment Required

- 403 Forbidden

- 404 Not Found

- 405 Method Not Allowed

- 406 Not Acceptable

- 407 Proxy Authentication Required

- 408 Request Timeout

- 409 Conflict

- 410 Gone

- 411 Length Required

- 412 Precondition Failed

- 413 Payload Too Large

- 414 URI Too Long

- 415 Unsupported Media Type

- 416 Range Not Satisfiable

- 417 Expectation Failed

- 418 I’m a teapot

- 421 Misdirected Request

- 422 Unprocessable Entity

- 423 Locked

- 424 Failed Dependency

- 426 Upgrade Required

- 428 Precondition Required

- 429 Too Many Requests

- 431 Request Header Fields Too Large

- 451 Unavailable For Legal Reasons

How are 4xx errors used to limit the crawl rate?

When a website owner or content delivery network (CDN) notices that Googlebot is crawling their site too quickly and causing issues, they might consider using 403s or 404s to slow down the crawl rate.

To do this, they might set up their web server to return a 403 or 404 error code in response to Googlebot’s requests, which would cause Googlebot to slow down and reduce its crawling rate.

In short, the idea is that Googlebot will reduce its crawl rate when the server answers its requests with 403 or 404 error codes.

Why are 4xx errors bad for rate-limiting Googlebot?

Except for 429, all 4xx errors indicate that the client’s request was wrong in some sense.

They do not indicate that anything is wrong with the server.

In simple words, 4xx codes describe problems with the client’s request, not problems on the server side.

If you use 403 or 404 codes to limit Googlebot’s crawl rate, you risk having your content removed from Google Search, or having Googlebot crawl content you don’t want indexed.

The situation gets worse if your robots.txt file itself is served with a 4xx error. In that case, Googlebot treats your site as if it had no robots.txt file at all and assumes there are no crawl restrictions.
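To make the robots.txt point concrete, here is a simplified Python model of the behavior Google documents for robots.txt status codes: 4xx codes other than 429 are treated as if no robots.txt exists, while 429 and 5xx codes are temporarily treated as if the whole site were disallowed. The function name and return strings are hypothetical; this is an illustration, not Google’s implementation.

```python
from http import HTTPStatus


def robots_txt_outcome(status: int) -> str:
    """Hypothetical, simplified model of how Google documents its handling
    of the HTTP status returned for robots.txt. Not Google's actual code."""
    if 200 <= status < 300:
        return "parse rules"  # the file is read and its rules are applied
    if 300 <= status < 400:
        return "follow redirect"  # Google follows a limited number of hops
    # 429 must be checked before the generic 4xx branch: it is the one
    # client error that Google handles like a server error.
    if status == HTTPStatus.TOO_MANY_REQUESTS or 500 <= status < 600:
        return "treat site as fully disallowed (temporarily)"
    if 400 <= status < 500:
        return "no restrictions"  # as if robots.txt did not exist
    return "unknown"
```

With this model, `robots_txt_outcome(404)` and `robots_txt_outcome(403)` both return "no restrictions", which is exactly the opposite of what a site trying to slow Googlebot down wants.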

Rate-limiting Googlebot also keeps Google from recrawling your pages and updating its index, so changes to your content may take longer to appear in search results.

For example, if you run an online store and use 4xx errors to limit Googlebot’s crawl rate, Googlebot may not be able to update Google’s index with your latest content in a timely manner.

This can result in outdated product information, such as incorrect pricing and descriptions, being displayed in search results.

Best way to rate limit Googlebot

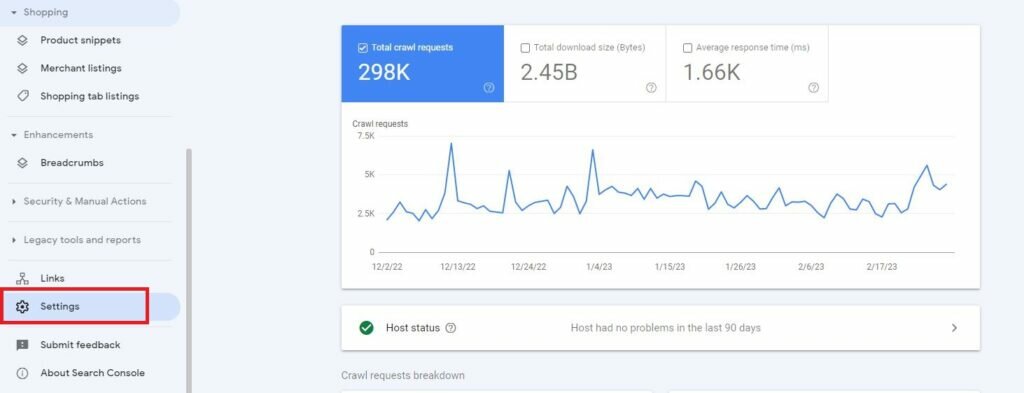

The most effective way to manage Googlebot’s crawl rate is through Google Search Console (formerly Google Webmaster Tools), where you can limit the rate at which Googlebot crawls your website.

Follow the steps below to reduce the crawl rate:

- Log in to your Google Search Console account and select the website you want to manage.

- Click ‘Crawl stats’ under the ‘Settings’ menu.

- Select the ‘Limit Google’s maximum crawl rate’ option.

- Choose your preferred crawl rate using the slider.

- Click ‘Save’ to save your changes.

By following these steps, you can manage Googlebot’s crawl rate and keep it from overloading your server.

By default, the Crawl Stats report is visible to all site owners, but the crawl rate settings page is available only for some sites.

You will find the Crawl Stats report under ‘Settings’ in the left navigation menu of your Google Search Console account.

If the crawl rate settings are not available for your website, you can submit a special request to Google to reduce the crawl rate. Note, however, that you cannot request an increase in the crawl rate.

FAQs on Rate Limiting Googlebot

A. A 4xx error indicates a client-side problem with the requested URL. For example, a 404 means the page is no longer live and has not been redirected elsewhere.

A. Limiting crawling means asking search engine crawlers to crawl your pages less often so that they don’t overload your server.

A. Returning 4xx errors in response to Googlebot’s requests is meant to slow down its crawl rate.

A. Because using 4xx errors may lead Google to ignore your robots.txt rules and crawl content you don’t want indexed, or to drop content you do want indexed from search results.

A. The best way to rate-limit Googlebot is through Google Search Console (formerly Google Webmaster Tools). Log in to your account and limit the crawl rate under the ‘Settings’ menu in the left navigation menu.

A. Googlebot can crawl the first 15MB of an HTML file or supported text-based file. For more information, check this link.
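If you want to check a page against this limit, the short Python sketch below reports how many bytes of a local file fall past it. It assumes that “15MB” means 15 × 1024 × 1024 bytes (an assumption on our part, since the exact byte count is not spelled out) and that the file on disk is stored uncompressed. The constant and function names are hypothetical.

```python
from pathlib import Path

# Assumption: "15MB" is read here as 15 * 1024 * 1024 bytes. Keep important
# content well below this figure rather than relying on the exact value.
GOOGLEBOT_LIMIT_BYTES = 15 * 1024 * 1024


def bytes_past_limit(size_bytes: int, limit: int = GOOGLEBOT_LIMIT_BYTES) -> int:
    """Return how many bytes fall after the limit (0 if the file fits)."""
    return max(0, size_bytes - limit)


def check_html_file(path: str) -> int:
    """Hypothetical helper: size check for an uncompressed file on disk."""
    return bytes_past_limit(Path(path).stat().st_size)
```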

Over to you

If Googlebot is consuming too much of your bandwidth and you’re planning to limit its crawl rate, please do not use 4xx errors to do it.

Instead, use specific HTTP status codes like 500, 503, or 429 to indicate to Googlebot that it needs to slow down its crawl rate.
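As a rough illustration of this advice, the Python sketch below uses only the standard library’s `http.server` to return 503 Service Unavailable once a simple fixed-window request counter is exceeded. The limits, the `FixedWindowLimiter` class, and the `serve()` helper are hypothetical; in practice this logic usually lives in your web server, load balancer, or CDN configuration. `Retry-After` is a standard HTTP header, but the sketch does not assume Googlebot honors it.

```python
import threading
import time
from http import HTTPStatus
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical limits for this sketch; tune them for your own server.
MAX_REQUESTS_PER_WINDOW = 10
WINDOW_SECONDS = 1.0
RETRY_AFTER_SECONDS = 120


class FixedWindowLimiter:
    """Allows at most `limit` requests per `window` seconds."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.window_start = clock()
        self.count = 0
        self.lock = threading.Lock()

    def allow(self):
        with self.lock:
            now = self.clock()
            if now - self.window_start >= self.window:
                # Start a new window and reset the counter.
                self.window_start = now
                self.count = 0
            self.count += 1
            return self.count <= self.limit


def choose_status(allowed):
    # 503 says "the server is temporarily overloaded"; 403/404 would instead
    # claim the page is forbidden or missing.
    return HTTPStatus.OK if allowed else HTTPStatus.SERVICE_UNAVAILABLE


limiter = FixedWindowLimiter(MAX_REQUESTS_PER_WINDOW, WINDOW_SECONDS)


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status = choose_status(limiter.allow())
        body = b"OK\n" if status == HTTPStatus.OK else b"Temporarily overloaded\n"
        self.send_response(status)
        if status == HTTPStatus.SERVICE_UNAVAILABLE:
            self.send_header("Retry-After", str(RETRY_AFTER_SECONDS))
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="127.0.0.1", port=8000):
    """Run the sketch server until interrupted (not started on import)."""
    HTTPServer((host, port), Handler).serve_forever()
```

After calling `serve()`, fetching `http://127.0.0.1:8000/` more than ten times within one second should start returning 503 responses. Returning `HTTPStatus.TOO_MANY_REQUESTS` (429) from `choose_status` instead would fit the same advice; the key design choice is that the overload signal is a 429 or 5xx code, never a 403 or 404.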

For beginners, limiting the crawl rate through Google Search Console is the best option. It is simple to use and requires no programming or technical knowledge.

Finally, if you have any questions or need help implementing these methods, feel free to contact me through the contact page or leave a message in the comment box below.

News Source: Don’t use 403s or 404s for rate limiting